I was eight years old when my father graduated from college. He’d spent the better part of a decade working all day, going to school at night, and trying to manage three young boys somewhere in between. I don’t remember when he found the time to do homework, but I do remember that he had these really interesting, magical-seeming books on his shelf or on his desk or on the kitchen table. Most of them were big and heavy with glossy pages and lots of pictures. Somewhere around the time I was nine or ten, my father handed me the smallest of these books, a thin, yellowed volume about the size and shape of a paperback, and told me I should try reading this one. It was filled with many weird symbols and lots of black-and-white sketches of shapes and curves. It was called something like “Algebra and Trigonometry,” neither of which were words that I knew. He also gave me one of these:

And showed me how to use it to measure these things called “angles.” I remember leafing through this book and coming upon this really neat “fact,” namely that the sum of the measures of the angles in any triangle is 180 degrees. Now, this was interesting! I knew what a triangle was, I knew how to measure angles, and I knew how to add. This was something I could explore! So, dutifully, I began sketching triangles in my notebook, measuring the angles, and adding up the results. And I quickly discovered something quite odd – my measurements didn’t always add up to 180. Sometimes I’d get 179.5 or 178 and sometimes 181 or 182. What, I wondered, was going on here? Was this magical book wrong? Had I discovered something new?

I remember showing my results to my father and him telling me it was my measurements that were wrong and imprecise. I wondered how he knew this, and he explained that we don’t know the sum is always 180 because we keep measuring triangles and finding it to be so; rather, we know this “fact” because of this thing called “proof.” It would be many, many years before I’d really start to understand this distinction, but it’s this distinction I want to talk about here today and ultimately relate to questions that seem to be swirling around concerning mathematical modeling and the notion of “real-world.”
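For readers who like to tinker, here is a minimal Python sketch of that childhood experiment. It is only an illustration under stated assumptions: the measurement model (up to one degree of hand error, then rounding to the nearest half degree) and the names `true_angles`, `protractor_reading`, and `measured_sum` are hypothetical and chosen for this example. The computed angles sum to 180 up to floating-point rounding, while the simulated protractor readings wander around that value.

```python
import math
import random


def true_angles(a, b, c):
    """Interior angles, in degrees, of the triangle with vertices a, b, c."""
    def angle_at(p, q, r):
        # Angle at vertex p between the rays p->q and p->r.
        v1 = (q[0] - p[0], q[1] - p[1])
        v2 = (r[0] - p[0], r[1] - p[1])
        dot = v1[0] * v2[0] + v1[1] * v2[1]
        cross = v1[0] * v2[1] - v1[1] * v2[0]
        return math.degrees(math.atan2(abs(cross), dot))

    return angle_at(a, b, c), angle_at(b, c, a), angle_at(c, a, b)


def protractor_reading(angle, rng, error=1.0, step=0.5):
    """Assumed measurement model (hypothetical): the hand is off by up to
    `error` degrees, and the scale is read to the nearest `step` degrees."""
    noisy = angle + rng.uniform(-error, error)
    return round(noisy / step) * step


def measured_sum(triangle, rng):
    """Add up three simulated protractor readings for one triangle."""
    return sum(protractor_reading(x, rng) for x in true_angles(*triangle))


rng = random.Random(0)  # fixed seed so repeated runs give the same readings
triangle = ((0.0, 0.0), (4.0, 0.0), (1.0, 3.0))

exact = sum(true_angles(*triangle))
sums = [measured_sum(triangle, rng) for _ in range(5)]

print(f"computed sum: {exact:.6f}")
print("measured sums:", sums)
```

No number of simulated measurements settles the question, of course. The simulation only shows why a notebook full of triangles can disagree with the book; the reason the sum is exactly 180 still has to come from proof.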

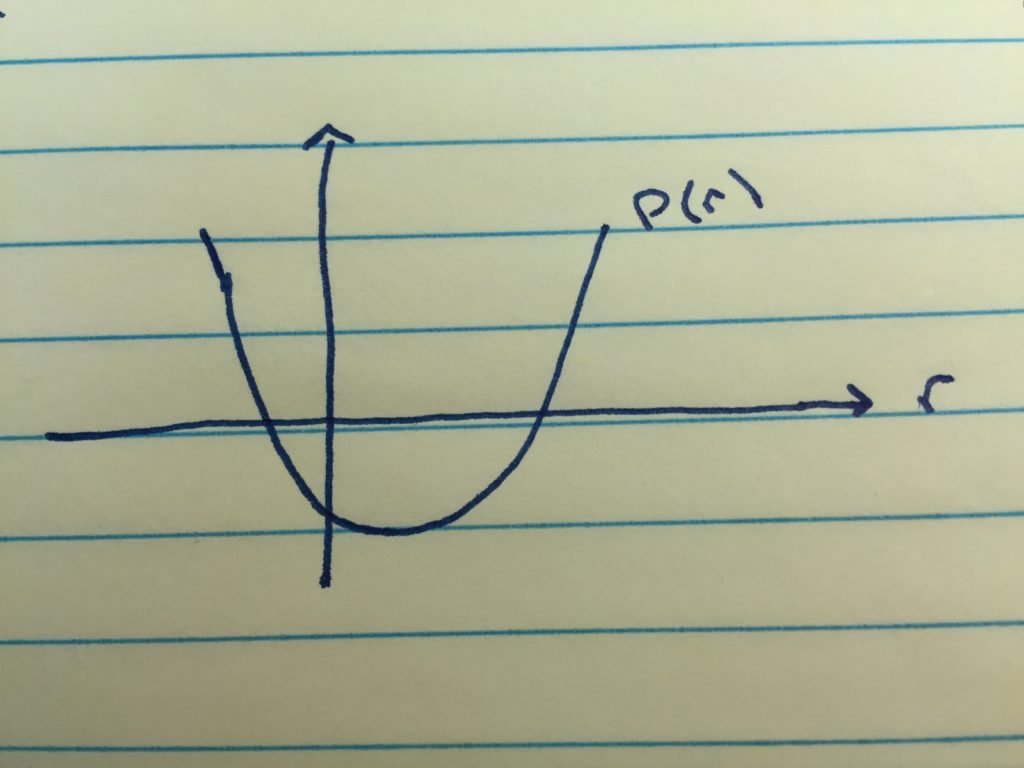

Let’s continue to think about triangles. Here’s a triangle:

Now, the problem is that I’ve just lied to you. The thing that you’re looking at isn’t actually a triangle at all. Rather, the thing you’re looking at is a representation of the abstract mathematical concept of “triangle.” This is a fine distinction, but an important one. Triangles don’t actually “exist” in the same sense that my cat exists, or the sun exists, or a bottle of water exists. A triangle only exists as this abstract object that is brought to life by a mathematical definition. One example of such a definition is:

A triangle is a polygon that has precisely three sides.

Like all good definitions, this tells us the class of objects that our new object (triangle) belongs to, namely the class of things called “polygons,” and it tells us the specific difference between our new object and other elements of the class, “has precisely three sides.” The picture above is not a triangle, but rather is a picture of what one of these abstract things we call “triangle” might look like if we attempted to visualize it. But, and this is important, triangles themselves simply do not exist anywhere in this place we live, this place we call “the universe.” We can’t point to a triangle, we can’t draw one, we can’t pick one up, touch one, or taste one. They only exist in this abstract world that we call “mathematics.”

But, you ask, are triangles real? And, this is where the confusion begins. This is not an easy question. Plato believed that they were real and that somewhere there existed, in the same sense as my cat exists, this “abstract world of forms” populated by things like triangles and continuous functions and notions of “catness.” Plato believed that this abstract world of forms was the primary reality and that the world of substance and matter which we inhabit was but a mere shadow of this abstract world.

Of course, Aristotle disagreed and argued that no such world of forms existed and that our world of substance was the primary reality, that is, it was the world that my cat inhabits that is actually “real.” Plato and Aristotle’s disagreement sits at the center of the famous fresco “The School of Athens” by Raphael (Raffaello Sanzio), which can be seen today in the Vatican. Plato, on the left, points upward toward his “world of forms,” while Aristotle, on the right, points forward to what’s in front of us.

Now, what does all this have to do with mathematical modeling? Let’s go back to a definition of mathematical modeling put forth in the GAIMME report:

Mathematical modeling is a process that uses mathematics to represent, analyze, make predictions, or otherwise provide insight into real-world phenomena.

There is much debate and angst that arises around the very last part of that definition, namely the use of the term “real-world.” Some argue that triangles are “real” to children in a way that irrigation systems are not and hence conclude that doing any form of mathematics is doing mathematical modeling. This is, of course, where one falls into the trap of equivocation. In arguing in this way, one changes the meaning of “real-world” from indicating Aristotle’s world of substance and things to meaning “familiar to me whether it’s part of the world of substance or not.” This equivocation is the challenge and the fault. We need to use “real-world” in the sense that it’s intended by those who state definitions such as are found in the GAIMME report. We can’t arbitrarily change that meaning any more than we can change the meaning of “polygon” in our definition of triangle to include objects with curved sides and still expect our definition of “triangle” to make sense.

But, does this really matter? What’s lost if we change the meaning of “real-world” to be a subjective one that means “anything with which I’m familiar”? This is the truly important part. In making that shift, all is lost. All that is special, and powerful, and unique about this practice called “mathematical modeling” is dependent upon this connection to the “real world,” where here, the “real world” means the physical world, the natural world, Aristotle’s world of substance, or what we commonly refer to as the “universe.” The magic of mathematical modeling is that it connects Plato’s abstract world of forms (whether you believe in it or not) to Aristotle’s world of substance.

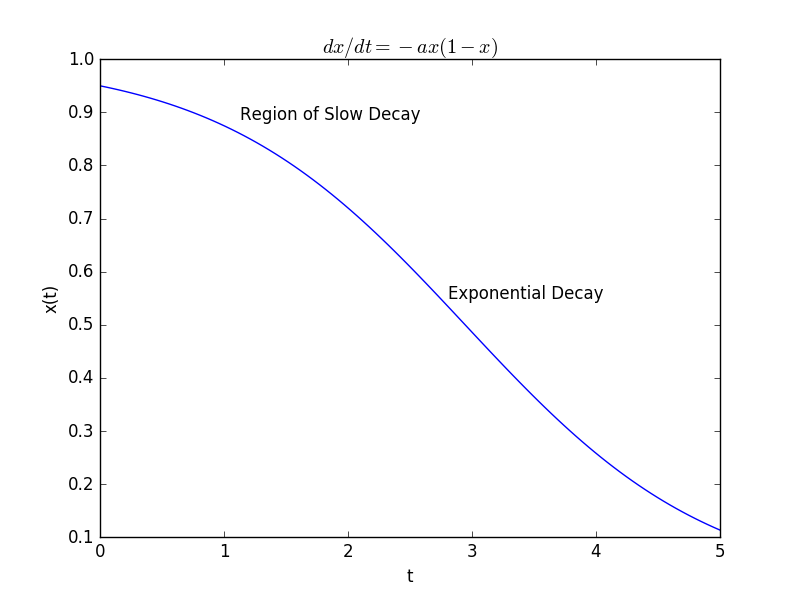

Mathematical modeling allows us to use the things we discover in the abstract world of mathematics to understand things in this other place, this space we inhabit, this non-abstract world of cats, and water bottles, and irrigation systems. The heart of mathematical modeling is the ability to bridge that gap, to make those connections, and to learn how to connect and use the abstract world to understand the world we inhabit. The skills needed to work in that gap are what is new about teaching and learning mathematical modeling, and they are what we lose if we equivocate and redefine “real-world.”

The fact is that working in that gap requires a new set of skills. And it’s this fact that makes the teaching and learning of mathematical modeling new and challenging. One must have an understanding of and be able to operate in the “mathematical world.” But one must also have an understanding of and be able to operate in the “real world.” One must know or learn things about physics, or chemistry, or sociology, or farming, to be able to connect what one knows in the mathematical world to this real world. One must learn how to make these connections, how to attach which abstract notions of mathematics to which phenomena in this space we inhabit. One must learn the strengths and limitations of such connections and how to test them. Engaging in this practice or process and doing so in order to understand or make predictions about the “real world” is what we call “mathematical modeling.”