In a previous post we introduced the idea of a “toy model” in the context of investigating pollution in the Great Lakes. Today, I want to talk more about this idea of a “toy model,” explore a simple toy model that you can introduce in your classroom, and point you toward one resource that’s full of such ideas for students at the middle and high school level.

When a modeler approaches a problem in the real world, they generally encounter something that is complicated, messy, and hard to get a handle on. It’s not clear what’s important and what’s not. It’s not clear what’s hidden underneath what can be observed, and it’s often not clear where to even begin trying to understand what they’re seeing. Modelers use many different approaches when they find themselves at this point with a new problem. They may recall an analogous situation that seems similar enough to the new one and use it as a starting point, trying to understand what’s different between what they see and what’s familiar. They may start with data and try to see what trends or patterns emerge. Or they might employ the mathematician’s strategy for a problem where you don’t even know where to start: replace it with a simpler problem that you can solve, but that is close enough to the original that you might learn something about solving the tough one. When modelers use this strategy, they often say they are “considering a toy model” or “playing with a toy model.”

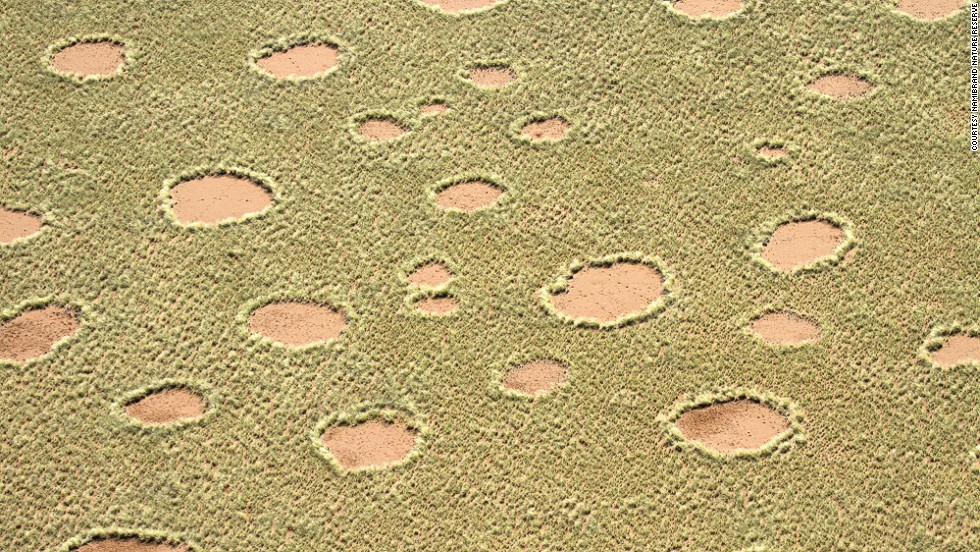

This strategy often leads to incredibly rich mathematics and insight into the real-world problem. While you may still be many steps away from fully understanding the real-world system, you’ve often found a path where, at the very least, you can start your journey. One beautiful example of this is what’s known as the Rényi Parking Problem. The Hungarian mathematician Alfréd Rényi first posed this problem as a toy model of the more complicated problem of random packing. Random packing situations arise in many areas of scientific and industrial interest. When scientists investigate how molecules bind to the surface of some object, it’s really a random packing problem. When you ask the question “How many jellybeans are in this jar?” you are really asking a random packing question. The basic idea is simple: if you randomly place objects in some confined region of space, how much of that space will you fill up? What happens if those objects can push around other objects that are already there? What happens if sometimes an object leaves that space? You can imagine that the problem in any particular application can quickly seem complicated and overwhelming.

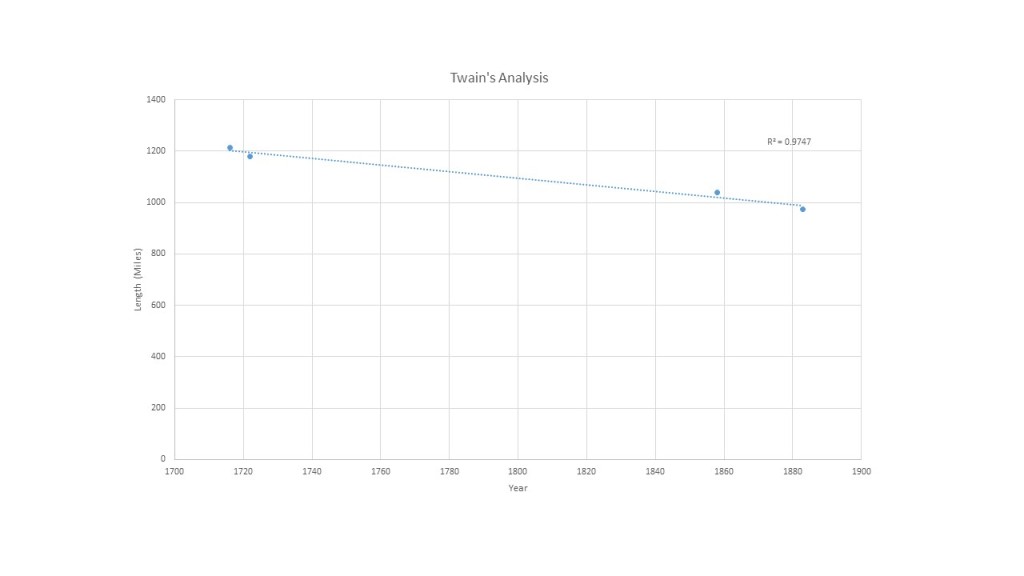

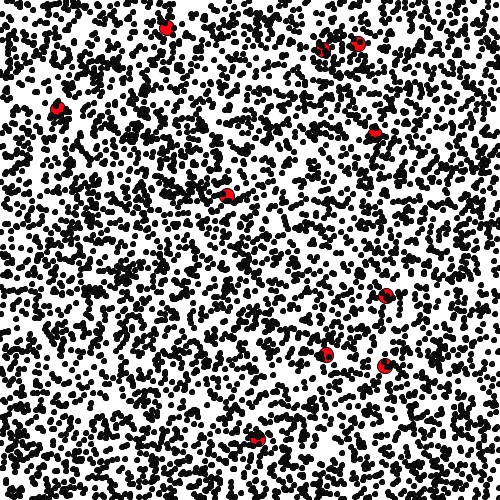

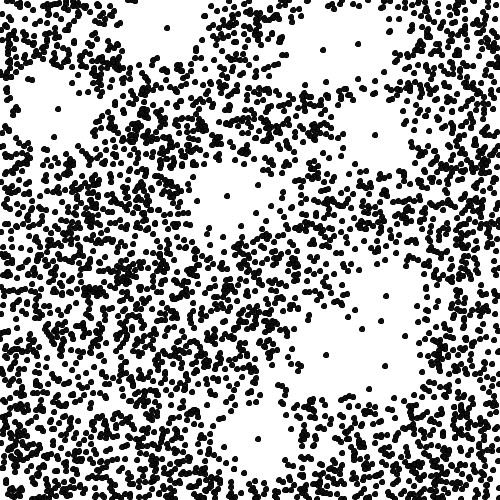

Rényi posed a simple-to-understand, but mathematically rich, toy model of random packing. He imagined a long street of some length, say L, where cars of unit length were allowed to park. He then asked, “What happens if the cars park randomly in any unit interval that’s not already occupied along this street?” So, there are no marked parking spaces, and the drivers are discourteous and don’t try to park close to other cars. The story of the investigation of this problem, and of the open questions that remain about it, is quite interesting. But here, what we want to observe is what Rényi did. He didn’t take any particular real-world problem and make assumptions and abstractions to get to a problem he could mathematize. Rather, he simply posed a problem that he could easily mathematize, but that had the “flavor” of the phenomenon he was trying to understand. That’s the essence of constructing a toy model and part of the art of mathematical modeling. Often the modeler has to be able to “see through” all of the real-world complications to some underlying “toy” system that can be grasped, mathematized, and understood.
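If you’d like to let students play with the parking problem on a computer, here is a minimal Python sketch. It is only one way to simulate it, not something taken from Rényi’s work: the function names `park_cars` and `mean_fill_fraction` are my own, and the code leans on the observation that once a car parks, the stretches of curb on either side of it fill up independently of each other.

```python
import random


def park_cars(street_length, rng):
    """Park unit-length cars at random until no more fit.

    Returns the sorted positions of each car's left end.
    (Hypothetical helper written for this illustration.)
    """
    positions = []
    gaps = [(0.0, float(street_length))]  # open stretches of curb still to fill
    while gaps:
        left, right = gaps.pop()
        if right - left < 1.0:
            continue  # too short for another car
        # Any spot in this gap where a whole car fits is equally likely.
        x = rng.uniform(left, right - 1.0)
        positions.append(x)
        # The new car splits the gap into two smaller, independent gaps.
        gaps.append((left, x))
        gaps.append((x + 1.0, right))
    return sorted(positions)


def mean_fill_fraction(street_length, trials, seed=0):
    """Average fraction of the street covered by cars over many random runs."""
    rng = random.Random(seed)
    total_cars = sum(len(park_cars(street_length, rng)) for _ in range(trials))
    return total_cars / (trials * street_length)
```

Calling `mean_fill_fraction(100, 200)` should give a value close to three quarters. For very long streets the fraction is known to approach roughly 0.7476, which makes a nice target for students to estimate before they run anything.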

The book Adventures in Modeling by Colella, Klopfer, and Resnick is chock-full of examples of toy models that can readily be investigated in the classroom. The book focuses on exploring complex, dynamic systems and hand-in-hand introduces the reader to using StarLogo as a simple simulation tool. StarLogo is one of those programming environments that was specifically designed to be easy for students to learn and to serve as an entry point into computer programming. But, even if you ignore the StarLogo part of the book, the problems and the toy models introduced are alone worth the price.

One activity that they explore is called “Foraging Frenzy,” and it’s a nice one for exploring mathematical modeling, toy models, and connecting your math classroom to biology and ecology. The underlying ecology problem is central to the field: how do you predict what an animal will do when foraging for food? You can imagine how complicated such a situation can get in context! Suppose we’re talking about field mice. How far will they roam? How will they decide? What happens if there are predators in the environment? How does their behavior depend on the season? Thought about in context, the problem is certainly one of those that can feel overwhelming. I’d even argue that if you gave it directly to a group of students, you might very well end up with them saying, “You can’t possibly predict what an animal will do when foraging for food!” So, what we often end up doing as teachers is just telling our students what happens and removing the whole exploration part from these complex problems. This is where I believe toy models can be very useful in the classroom.

What Colella et al. do is introduce a toy “foraging model” that does feel tractable and does feel like one where students can start seriously exploring and thinking about how to model. Their setup is this: buy a big bag of dried kidney beans, and get three stopwatches and a piece of paper. Now, assign two students to be “food givers” and give them each half of the beans and a stopwatch. Have them sit close to one another and secretly tell each of them the rate at which they are to give out their beans. For example, tell one student to give out a bean every five seconds and the other every fifteen seconds. Now, tell the rest of your students that their job is to get as many beans as they can, but they have to follow a few rules. They have to stand in line in front of one of the “food givers” and take a bean when it is given to them. They are allowed to switch lines at any time, but must always move to the back of the other line. After they get a bean, they must also move to the back of one of the lines. Now, let them go! In the meantime, you’re using your stopwatch to gather data. Note the number of people in each line at regular time intervals, say every 30 seconds. Let the whole process run for 5 minutes and then share what you’ve recorded with the class.

What you have presented students with is a really simple, accessible “toy model” of foraging behavior. It’s one for which you have data and one that’s more manageable in scope, yet it still captures the essential features of the real-world ecological problem. Now, it’s time to discuss and think about modeling. If your students behave like many animals in the natural world, what you’ll see is that the lengths of the lines become proportional to the rates of distribution that you set at the beginning. For example, with one giver handing out a bean every five seconds and the other every fifteen, you would expect roughly three times as many students in the faster line. That’s something that’s called “Ideal Free Distribution” theory and is the basis for making those predictions about what a foraging animal will actually do.
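To give students something to compare their classroom data against, here is a small Python sketch of the proportionality idea. It is my own simplification, not the book’s activity or its StarLogo code: the function name `ideal_free_split` is made up for this post, and I assume each student switches lines only when doing so would increase their own share of beans (a line’s rate divided by the number of people in it).

```python
def ideal_free_split(num_foragers, rates):
    """Let foragers switch lines one at a time until nobody gains by moving.

    rates: beans handed out per minute by each food giver (positive integers).
    Returns the number of foragers settled in each line.
    (Hypothetical helper; a greedy sketch, not the book's method.)
    """
    k = len(rates)
    # Assumption: start with the class split as evenly as possible.
    counts = [num_foragers // k] * k
    counts[0] += num_foragers - sum(counts)

    moved = True
    while moved:
        moved = False
        for i in range(k):
            for j in range(k):
                if i == j:
                    continue
                # Share in line i now: rates[i] / counts[i].
                # Share after joining line j: rates[j] / (counts[j] + 1).
                # Cross-multiplied so integer rates are compared exactly.
                if rates[j] * counts[i] > rates[i] * (counts[j] + 1):
                    counts[i] -= 1
                    counts[j] += 1
                    moved = True
    return counts
```

With the rates from the example above (a bean every five seconds is 12 per minute, every fifteen seconds is 4 per minute) and an assumed class of 20 foragers, `ideal_free_split(20, [12, 4])` returns `[15, 5]`, the same 3-to-1 ratio as the rates. Real students, like real animals, will be noisier than this, and talking about why is part of the modeling conversation.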

I encourage you to make use of toy models liberally in your classroom as you introduce the notion of mathematical modeling. Let us know if you try this one out or come up with other neat “toy models.” We’d love to hear from you.

John